NEC Corporation (TSE: 6701) has enhanced and expanded the performance of its lightweight large language model (LLM) and plans to launch it in the spring of 2024. With this development, NEC aims to provide an optimal environment for using generative artificial intelligence (AI). The environment will be customized to each customer’s business and centered on specialized models built on NEC’s industry and business know-how.

These services are expected to greatly expand opportunities to transform operations across a wide range of industries, including healthcare, finance, local government and manufacturing. NEC will also focus on developing specialized models that drive business transformation. Through managed application programming interface (API) services, it will promote the use of generative AI beyond individual companies to entire industries.

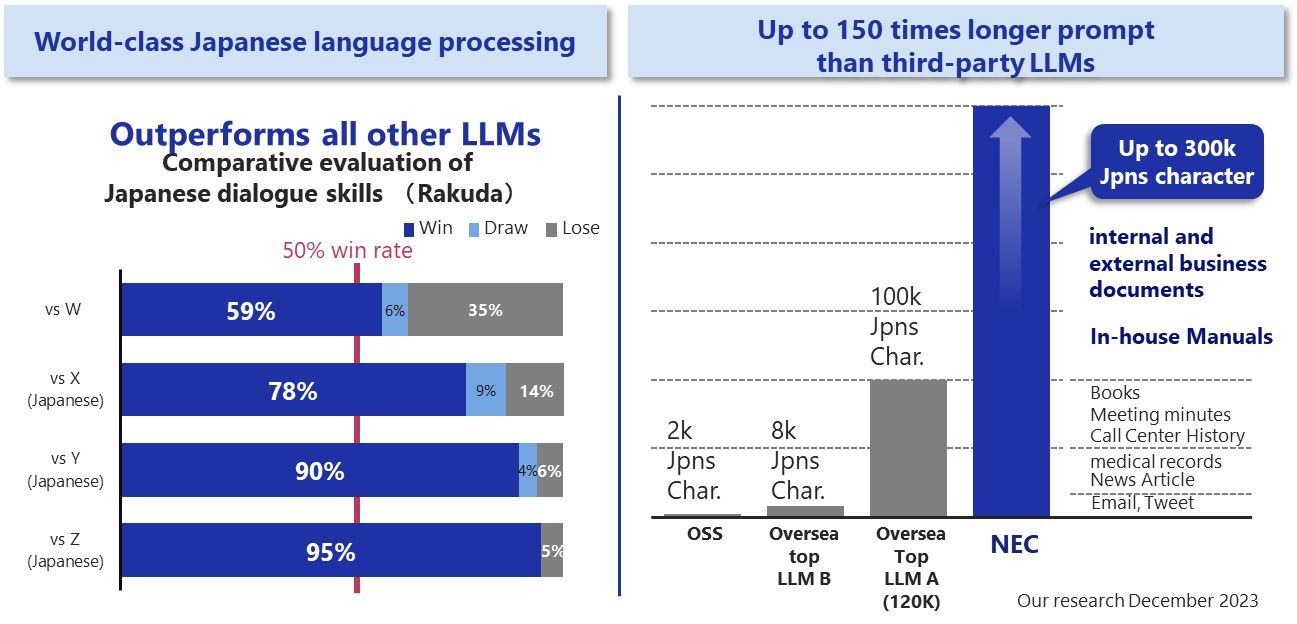

NEC has enhanced its LLM by doubling the amount of high-quality training data. It confirmed that the model outperformed a group of top-class LLMs in Japan and abroad in a comparative evaluation of Japanese dialogue skills (Rakuda*). The LLM can also handle up to 300,000 Japanese characters, up to 150 times more than third-party LLMs can. This makes it suitable for a wide range of operations that involve large volumes of documents, such as internal and external business manuals.

NEC is also developing a “new architecture” that creates new AI models by flexibly combining existing models according to the input data and task. With this architecture, NEC aims to establish a scalable foundation model whose parameter count and functionality can be expanded. The model can be scaled from small to large without performance degradation. It can also link flexibly with a variety of AI models, including specialized AI for legal or medical purposes and models from other companies and partners. In addition, its small size and low power consumption allow it to be installed in edge devices. By combining the model with NEC’s world-class image recognition, audio processing, and sensing technologies, the LLMs can process a variety of real-world events with high accuracy and autonomy.

In parallel, NEC has started developing a large-scale model with 100 billion parameters, far larger than its conventional 13-billion-parameter model. Through these efforts, NEC aims to achieve sales of approximately 50 billion yen from its generative AI-related business over the next three years.

The development and use of generative AI have accelerated rapidly in recent years. Companies and public institutions are examining and verifying business reforms that use various LLMs, and demand for such reforms is expected to grow. However, many challenges to adoption remain. These include the need for prompt engineering to instruct the AI accurately, security concerns such as information leakage and vulnerabilities, and coordination with business data during implementation and operation.

Since launching the NEC Generative AI Service in July 2023, NEC has been using the NEC Inzai Data Center, which provides a low-latency and secure LLM environment. Using its own LLM, NEC has been building and providing customer-specific “individual company models” and “business-specific models” ahead of the rest of the industry, and it has accumulated know-how in the process.

NEC is applying this know-how to provide the best solutions for customers in a variety of industries. It offers an LLM built on a scalable foundation model, together with an environment for using generative AI that is optimized for each customer’s business.

About the generative AI business strategy

1. Three-stage development of generative AI business through managed API services

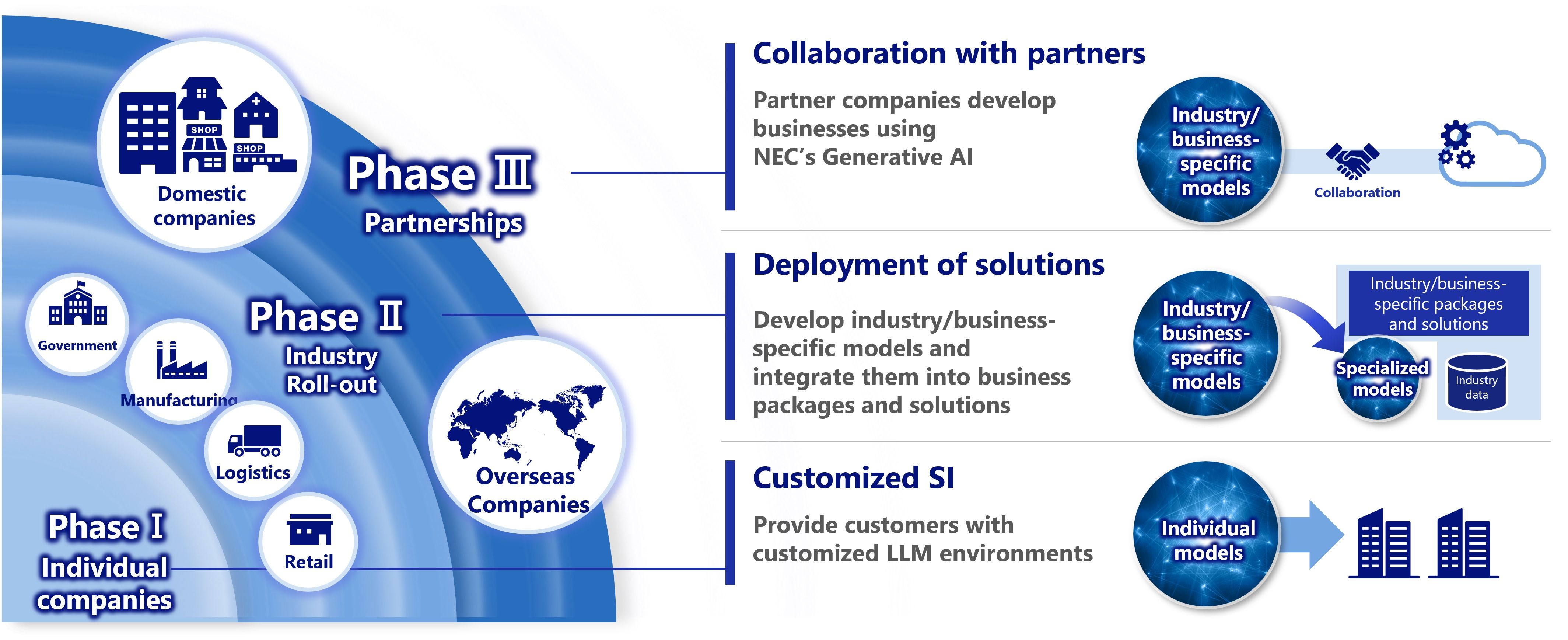

The Managed API Service provides dialogue and search functions using LLMs, including the LLM developed by NEC. With the Managed API Service at its core, the generative AI business will be developed in three phases: (Phase 1) building industry- and business-specific models for individual companies, (Phase 2) incorporating industry- and business-specific models into business packages and solutions, and (Phase 3) developing business packages and solutions through partnerships with other companies.

2. Strengthening the structure of technology development and sales activities

In addition to the NEC Generative AI Hub, a specialized organization reporting directly to the Chief Digital Officer (CDO), and the Digital Trust Promotion Management Department, NEC has appointed “ambassadors” from its corporate sales department to promote the generative AI business. This structure will enable NEC to provide customers in various industries with the best possible generative AI solutions.

A new “Generative AI Centre” has also been established to oversee the generative AI technology that supports these activities. Approximately 100 leading-edge generative AI researchers from NEC’s global research centers will be virtually integrated. Seamless collaboration between research and business will accelerate the commercialization of their results.

3. Partner collaboration for the provision of safe and reliable LLM

Looking to the future, NEC is promoting the LLM Risk Assessment Project in collaboration with Robust Intelligence to provide secure and reliable LLMs.

NEC will provide industry- and business-specific models that have been risk assessed according to global standards.

While pioneering new AI innovations, NEC will continue to work with customers to enhance value, including by expanding its services and functions. It will also continue to provide safe and secure generative AI services and solutions that solve customer challenges.